Motivation

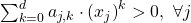

Most modern machine learning algorithms are built on the same feature-weighting framework, i.e. ![]() . To capture possible interactions between features, the prevalent approach is to expand the feature set ![]() with interaction terms. However, this process is highly ad hoc and depends heavily on manual trial and error.
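
The manual expansion described above can be sketched in NumPy. This is a minimal illustration, not part of the proposal: the helper names `add_pairwise_interactions` and `fit_linear` are hypothetical, and only pairwise products are added. In practice you also have to guess which higher-order or cross terms to include.

```python
import itertools

import numpy as np


def add_pairwise_interactions(X: np.ndarray) -> np.ndarray:
    """Append every pairwise product X[:, i] * X[:, j] (i < j) as extra columns.

    X has shape (n_samples, n_features); the result has
    n_features + n_features * (n_features - 1) // 2 columns.
    """
    n_features = X.shape[1]
    products = [X[:, i] * X[:, j] for i, j in itertools.combinations(range(n_features), 2)]
    return np.column_stack([X, *products])


def fit_linear(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Ordinary least squares for y ~ w[0] + X @ w[1:] (a bias column is prepended)."""
    design = np.column_stack([np.ones(len(X)), X])
    weights, *_ = np.linalg.lstsq(design, y, rcond=None)
    return weights


# Usage: the target depends only on the product x0 * x1.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
y = 2.0 + 3.0 * X[:, 0] * X[:, 1]
w = fit_linear(add_pairwise_interactions(X), y)  # columns: bias, x0, x1, x0*x1
```

A linear fit on x0 and x1 alone cannot represent this target. The fit on the expanded features recovers weights close to [2, 0, 0, 3]. This works only because the right term was chosen by hand.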

Proposal

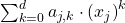

To overcome this problem, I propose a new learning framework ![]() , in which ![]() represents the general polynomial form of one of the ![]() features ![]() , up to degree ![]() . ![]() is a hyperparameter that can be searched incrementally.

This framework provides a more intuitive learning strategy and gives the model much more power to fit hypothesized relationships between the given features.
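
The framework's equations did not survive in this copy, so the sketch below rests on an explicit assumption. It treats the model as a product of per-feature polynomials, $\hat{y} = \prod_i f_i(x_i)$ with $f_i(x) = \sum_{k=0}^{K} a_{ik} x^k$. This reading fits the later discussion of computing in log space, but the text does not confirm it. The names `feature_polynomials`, `predict`, and the coefficient array `coeffs` are hypothetical.

```python
import numpy as np


def feature_polynomials(X: np.ndarray, coeffs: np.ndarray) -> np.ndarray:
    """Evaluate f_i(x_i) = sum_k coeffs[i, k] * x_i**k for every sample and feature.

    X: (n_samples, n_features); coeffs: (n_features, K + 1), where column k
    multiplies x**k. Returns an (n_samples, n_features) array.
    """
    powers = X[:, :, None] ** np.arange(coeffs.shape[1])  # (n_samples, n_features, K + 1)
    return np.einsum("sik,ik->si", powers, coeffs)


def predict(X: np.ndarray, coeffs: np.ndarray) -> np.ndarray:
    """ASSUMED combination rule: y_hat = prod_i f_i(x_i) (not confirmed by the text)."""
    return np.prod(feature_polynomials(X, coeffs), axis=1)


# Usage: with K = 1 and every f_i(x) = 1 + x, the product expands to
# 1 + x0 + x1 + x0*x1, so the interaction term appears without being listed.
X = np.array([[2.0, 3.0], [0.5, -1.0]])
coeffs = np.array([[1.0, 1.0], [1.0, 1.0]])
y_hat = predict(X, coeffs)  # values: 12.0 and 0.0
```

Under this reading, expanding the product produces every cross term up to the chosen degree. Manual feature expansion would otherwise have to enumerate those terms.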

Derivations of Gradients

Assuming that ![]() , and that our goal is to minimize their L2 distance, the objective function is ![]() . At the optimum, this is equivalent to ![]() .

Thus, its first-order derivative is ![]() , while its second-order derivative is ![]() .
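
The derivative formulas are missing here. The sketch below therefore derives a gradient independently, under the same assumed product model $\hat{y}_s = \prod_i f_i(x_{si})$. It uses the loss $L = \tfrac{1}{2}\sum_s (\hat{y}_s - y_s)^2$, which gives $\partial L / \partial a_{ik} = \sum_s r_s\, x_{si}^k \prod_{j \ne i} f_j(x_{sj})$ with $r_s = \hat{y}_s - y_s$. The analytic gradient is checked against central finite differences. The leave-one-out product is formed directly rather than as $\hat{y}_s / f_i(x_{si})$, so no $f_i \ne 0$ condition is needed. The function names are hypothetical.

```python
import numpy as np


def feature_polynomials(X, coeffs):
    """f_i(x_i) for every sample and feature; coeffs[i, k] multiplies x_i**k."""
    powers = X[:, :, None] ** np.arange(coeffs.shape[1])
    return np.einsum("sik,ik->si", powers, coeffs)


def loss_and_grad(X, y, coeffs):
    """L = 0.5 * sum_s (y_hat_s - y_s)**2 for the ASSUMED model y_hat = prod_i f_i(x_i).

    dL/dcoeffs[i, k] = sum_s r_s * x_si**k * prod_{j != i} f_j(x_sj), with r = y_hat - y.
    """
    n_features, n_terms = coeffs.shape
    F = feature_polynomials(X, coeffs)            # (n_samples, n_features)
    residual = np.prod(F, axis=1) - y             # (n_samples,)
    powers = X[:, :, None] ** np.arange(n_terms)  # (n_samples, n_features, K + 1)
    grad = np.empty_like(coeffs)
    for i in range(n_features):
        # Product of the other features' polynomials, computed without dividing by F[:, i].
        others = np.prod(np.delete(F, i, axis=1), axis=1)
        grad[i] = (residual * others) @ powers[:, i, :]
    return 0.5 * np.sum(residual ** 2), grad


def numerical_grad(X, y, coeffs, eps=1e-6):
    """Central finite differences, used only to check loss_and_grad."""
    grad = np.zeros_like(coeffs)
    for idx in np.ndindex(coeffs.shape):
        step = np.zeros_like(coeffs)
        step[idx] = eps
        plus, _ = loss_and_grad(X, y, coeffs + step)
        minus, _ = loss_and_grad(X, y, coeffs - step)
        grad[idx] = (plus - minus) / (2 * eps)
    return grad


# Usage: 3 features, degree K = 2, random data.
rng = np.random.default_rng(1)
X = rng.normal(size=(50, 3))
y = rng.normal(size=50)
coeffs = rng.normal(size=(3, 3))
_, g = loss_and_grad(X, y, coeffs)
max_error = np.max(np.abs(g - numerical_grad(X, y, coeffs)))  # should be tiny
```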

Unresolved Challenges

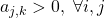

- The above derivations rely on two strong assumptions: ![]() and ![]() .
- To speed up convergence, it is better to use Newton's method to determine the step, which requires the Hessian matrix (i.e. the Jacobian of the gradient) formed from ![]() to be positive definite rather than merely positive semi-definite.
- To improve floating-point precision, it would be better to compute ![]() in log space and then convert the result back to normal space. However, this again requires ![]() and ![]() .
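
For the Newton-step point, one standard remedy when the Hessian is only positive semi-definite (or indefinite) is Hessian modification: add a multiple of the identity until a Cholesky factorization succeeds. The sketch is a swapped-in technique, not the author's method. `modified_newton_step`, `beta`, and `max_tries` are hypothetical names, and the initial shift and doubling rule are conventional but arbitrary choices.

```python
import numpy as np


def modified_newton_step(grad, hess, beta=1e-3, max_tries=60):
    """Return (p, tau) with p = -(H + tau*I)^{-1} g, where tau >= 0 is the first tried
    shift for which H + tau*I is positive definite (its Cholesky factorization succeeds).
    """
    n = hess.shape[0]
    hess = 0.5 * (hess + hess.T)  # symmetrize against round-off
    min_diag = np.min(np.diag(hess))
    tau = 0.0 if min_diag > 0 else beta - min_diag
    for _ in range(max_tries):
        try:
            L = np.linalg.cholesky(hess + tau * np.eye(n))
        except np.linalg.LinAlgError:
            tau = max(2.0 * tau, beta)  # not positive definite yet: enlarge the shift
            continue
        # Solve (L @ L.T) p = -g with two solves against the triangular factor.
        z = np.linalg.solve(L, -grad)
        return np.linalg.solve(L.T, z), tau
    raise RuntimeError("could not make the Hessian positive definite")


# Usage: a singular (positive semi-definite) Hessian still yields a descent step.
H = np.array([[1.0, 0.0], [0.0, 0.0]])
g = np.array([1.0, 1.0])
p, tau = modified_newton_step(g, H)
```

When H is already positive definite, tau stays 0 and the step is the plain Newton step. Otherwise the shift trades some curvature information for a guaranteed descent direction.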

Workarounds

I tried moving the calculation from the real domain to the complex domain, which most Python numerical libraries support. However, I am not sure how to interpret the resulting complex values. Worse, the model's convergence is sometimes still poor.
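
On interpreting the complex values: for a negative real number the principal complex logarithm is $\ln|x| + i\pi$. Summing complex logs of factors therefore gives the log-magnitude of the product in the real part and $\pi$ times the number of negative factors in the imaginary part, which encodes the sign. The sketch keeps the log-space idea in real arithmetic by tracking the sign and the log-magnitude separately. This is the same idea as `numpy.linalg.slogdet`. `signed_log_product` is a hypothetical name, and `F` stands for the per-feature polynomial values under the product model assumed earlier.

```python
import numpy as np


def signed_log_product(F):
    """Return (sign, log_abs) such that prod(F, axis=1) == sign * exp(log_abs).

    Magnitudes are summed in log space and signs are multiplied separately, so no
    factor has to be positive. Rows with a zero factor get sign 0 and log_abs -inf.
    """
    sign = np.prod(np.sign(F), axis=1)
    with np.errstate(divide="ignore"):  # log(0) -> -inf without a warning
        log_abs = np.sum(np.log(np.abs(F)), axis=1)
    return sign, log_abs


# Usage: compare with the complex-log workaround on rows without zeros.
F = np.array([[2.0, -3.0, 0.5], [-1.0, -4.0, 2.0]])
sign, log_abs = signed_log_product(F)
z = np.log(F.astype(complex)).sum(axis=1)  # real part == log_abs; exp(1j * z.imag) == sign
```

Either representation recovers the product as `sign * exp(log_abs)`. A zero factor still needs special handling, since its logarithm is unbounded.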

Keywords

Interactive Learning